Learning from People, Integrating Robots Into Unstructured Environments

Until recently, most robots have been pre-programmed industrial robots that execute repetitive tasks in structured, controlled environments, such as manufacturing plants, where few or no humans work. The goal of industrial robots is to automate work that humans would otherwise have to do.

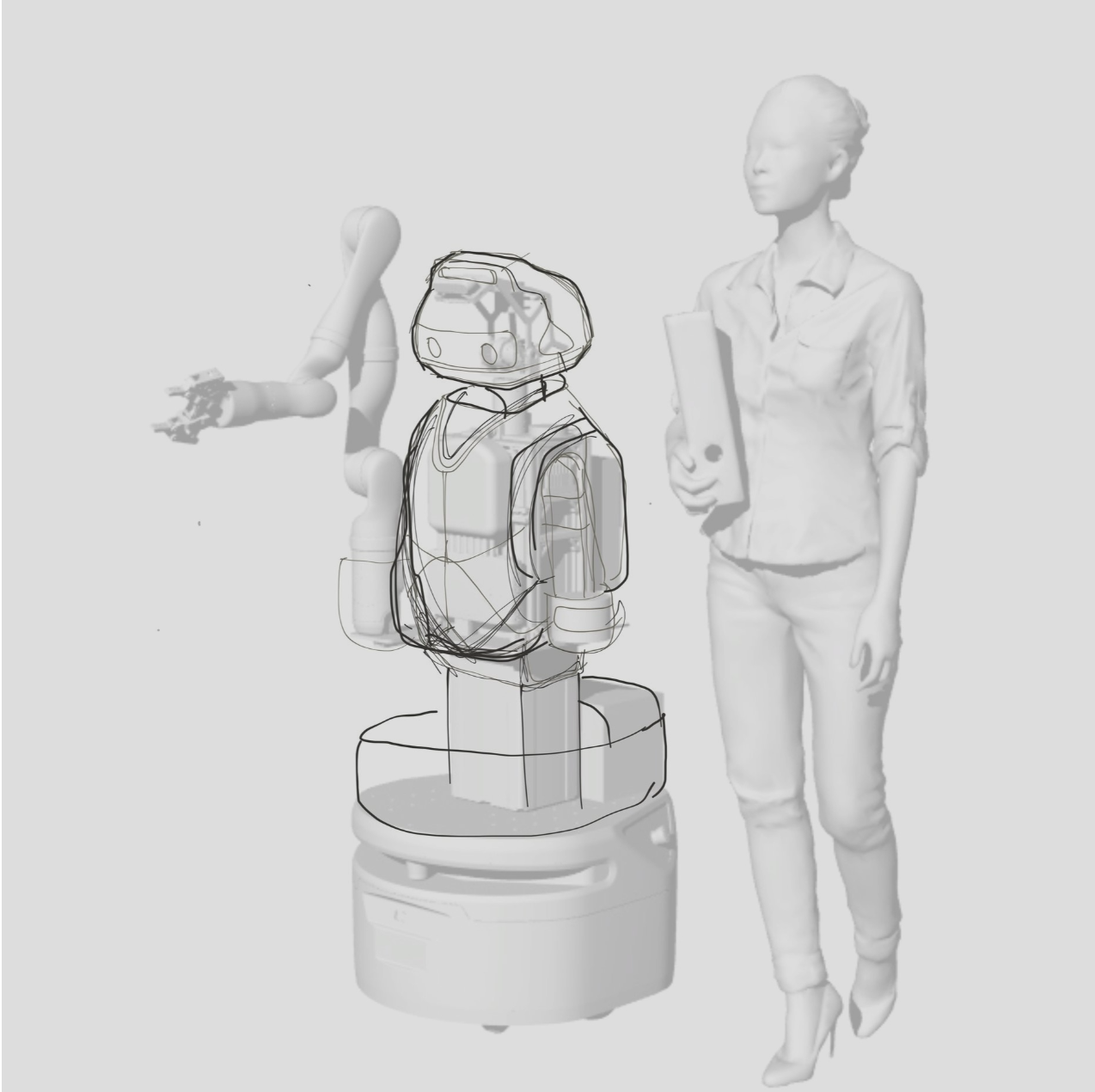

But the future requires more than behind-the-scenes brute (and mute) automated task execution. It requires robots that don’t replace human jobs but enhance them: robot teammates that handle the parts of the work that can be automated, giving people more time for the vital, human-centric parts. The future requires robots that can operate in unstructured, variable, and cluttered environments, where they must continuously learn and react to unexpected situations.

While cobots (collaborative robots that work with people) present significantly more complex technical challenges than industrial robots, the opportunities for cobots to enhance our human workplaces are limitless.

WHAT QUALITIES DOES A COBOT NEED TO HAVE?

For cobots to fulfill their mission as trusted, reliable members of human/robot teams, they must be able to operate in fast-paced and chaotic human environments. This requires a technical framework that allows the cobot to adapt to variability using a variety of sensory inputs. In particular, we believe that multi-sensory input that integrates visual, auditory, and haptic (touch) data is crucial for cobots to operate in human environments. Cobots that can modify their actions based on the environment have a skill we call “adaptive object manipulation.”

The second quality cobots must have is the ability to assist with the highly precise tasks that humans have to do, such as picking up tiny items from shelves of varying heights. This is known as “dexterous manipulation.” Our nurse helper robot, Moxi, for example, has a high degree-of-freedom arm and a sturdy gripper hand that allow it to pick up objects of various sizes.

And most importantly, we believe the key technical component cobots must have is the ability (and motivation) to learn from human teachers, or what we call “human-guided learning” or “human-guided exploration.” In human environments, things are always changing and evolving. Just as humans are always learning how to navigate our changing environments and complete new tasks, cobots need a framework that allows them to learn from their environment and from humans.

Examples of situations in which cobots need an advanced, learning-oriented technical framework (drawn from real situations Moxi has faced):

Moxi hears (using auditory input) an unexpected ambulatory situation coming up behind it, so Moxi turns around and sees (using visual data) a patient on a gurney being quickly wheeled toward it on the left side of the hallway. Moxi needs to learn to move quickly to the right side of the hallway, out of the way.

Moxi needs to pick up a gauze pad to deliver to room 429, but realizes that it has never learned to pick up a gauze pad. Moxi then requests to be taught the crucial information needed to identify and manipulate a gauze pad. The teacher shows Moxi (using human-guided exploration) how to grasp the gauze pad. Meanwhile, Moxi records key information such as the weight (haptic), color (visual), and sound (audio) of the object as it picks up the gauze pad. Now whenever Moxi needs to deliver a gauze pad to any room, it knows what to expect. Furthermore, if Moxi ever sees a white pad that looks like a gauze pad but is not quite the right weight, it can use this information to either adapt (for example, when it has picked up too many pads) or request information (for example, when the gauze pads have been replaced with wound dressings).
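To make the gauze pad example more concrete, here is a minimal sketch of how a learned weight and color could drive the choice between proceeding, adapting, and asking for help. This is an illustration only, not Moxi’s actual software: the names `LearnedObject` and `check_grasp`, the tolerance, and the weights are all assumptions made up for this example, and the sound reading is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class LearnedObject:
    """Properties recorded during a teacher's demonstration (hypothetical)."""
    name: str
    weight_g: float  # haptic: expected weight of a single item, in grams
    color: str       # visual: dominant color of the item


def check_grasp(learned: LearnedObject, seen_color: str, felt_weight_g: float,
                tolerance: float = 0.15) -> str:
    """Return 'proceed', 'adapt', or 'ask_teacher' for a grasped item.

    The tolerance is an assumed relative margin on the weight of one item.
    """
    # The item does not even look like what was taught: ask for help.
    if seen_color != learned.color:
        return "ask_teacher"

    ratio = felt_weight_g / learned.weight_g

    # Looks right and weighs about what one item should weigh.
    if abs(ratio - 1.0) <= tolerance:
        return "proceed"

    # Weighs roughly a whole multiple of one item: probably grabbed several.
    count = round(ratio)
    if count >= 2 and abs(ratio - count) <= tolerance * count:
        return "adapt"

    # Looks right but the weight fits no simple explanation: ask for help.
    return "ask_teacher"


# Illustrative values only; a real gauze pad's weight is not given in this post.
gauze = LearnedObject(name="gauze pad", weight_g=4.0, color="white")
print(check_grasp(gauze, "white", 4.2))
print(check_grasp(gauze, "white", 12.1))
print(check_grasp(gauze, "white", 6.4))
```

In this sketch, a white pad with an unexplained weight falls through to the teacher, which mirrors the idea that people are the richest source of information when the robot’s own expectations break down.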

While we lose structure in human environments (which makes tasks very difficult for robots), we gain the help of people. When human teachers, the richest source of information, are added to the equation, tasks that previously were impossible for robots become possible. Understanding how human teachers can guide cobots and how cobots can learn from human teachers is the key ingredient to successfully integrating human-supporting cobots in the real world.

WHY ARE “COBOTS” IMPORTANT?

Humans have irreplaceable qualities that robots can never match: emotions, passions, intuition, drive, creativity, analytical skills, and the ability to build meaningful human relationships.

At Diligent, we get to see humans’ amazing ability to connect with others and solve problems every day. We see nurses comfort patients who are confused, frustrated, and in pain. We see nurses connect with their patients, however shy or loud, while also making sure everything is as expected according to their medical chart. We see nurses go the extra mile to check on even the smallest issues that they suspect might be a problem, because they have what robots do not: human warmth and intuition.

The ability to deal with the unexpected, solve problems, and connect with others is what makes us human: things technology cannot and should not replace. However, socially intelligent cobots like Moxi can help humans with our increasing loads of busy work (nurses spend about 30% of their time on non-patient-facing logistical work) so that people can spend more time on people (nurses with their patients).

Imagine if a cobot could help you with your busy work. And imagine if the cobot learned directly from you, the human it’s helping, to make sure it’s really helping you in the most efficient way.

What would you do with that extra time? Who could you have a positive, deeper impact on?

We’re proud to be part of a movement helping people have more time to use their important human-centric skills, starting with helping nurses.